Define a two-layer neural network (one hidden layer plus an output layer) in Python.
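Written out, the forward pass and the backpropagation updates for this network look as follows. Here $X$ is the input batch (one sample per row), $T$ the targets, $\eta$ the learning rate, and $\odot$ the elementwise product. The squared-error loss $L$ is an assumption, since the original never names a loss. $W_1, b_1, W_2, b_2$ correspond to `weights_input_hidden`, `bias_input_hidden`, `weights_hidden_output` and `bias_hidden_output`. The factors $Y \odot (1 - Y)$ and $H \odot (1 - H)$ come from $\sigma'(z) = \sigma(z)(1 - \sigma(z))$, which is why `sigmoid_derivative` is applied to sigmoid outputs rather than raw inputs.

$$
\begin{aligned}
H &= \sigma(X W_1 + b_1), \qquad Y = \sigma(H W_2 + b_2), \qquad L = \tfrac{1}{2}\textstyle\sum (T - Y)^2 \\
\delta_2 &= (T - Y) \odot Y \odot (1 - Y) \\
\delta_1 &= (\delta_2 W_2^{\top}) \odot H \odot (1 - H) \\
W_2 &\leftarrow W_2 + \eta\, H^{\top} \delta_2, \qquad b_2 \leftarrow b_2 + \eta\, \mathbf{1}^{\top} \delta_2 \\
W_1 &\leftarrow W_1 + \eta\, X^{\top} \delta_1, \qquad b_1 \leftarrow b_1 + \eta\, \mathbf{1}^{\top} \delta_1
\end{aligned}
$$

Each update equals $-\eta$ times the gradient of $L$, and $\mathbf{1}^{\top}\delta$ is the column sum, i.e. `np.sum(delta, axis=0, keepdims=True)`.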

import numpy as np

class NeuralNetwork:
    def __init__(self, input_size, hidden_size, output_size):
        # Initialize weights and biases with uniform random values in [0, 1)
        self.weights_input_hidden = np.random.rand(input_size, hidden_size)
        self.bias_input_hidden = np.random.rand(1, hidden_size)
        self.weights_hidden_output = np.random.rand(hidden_size, output_size)
        self.bias_hidden_output = np.random.rand(1, output_size)

    def sigmoid(self, x):
        # Sigmoid activation function
        return 1 / (1 + np.exp(-x))

    def sigmoid_derivative(self, x):
        # Derivative of the sigmoid expressed through its output:
        # if s = sigmoid(z), then ds/dz = s * (1 - s), so x must already be a sigmoid output
        return x * (1 - x)

    def forward(self, inputs):
        # Propagate from the input layer to the hidden layer
        hidden_inputs = np.dot(inputs, self.weights_input_hidden) + self.bias_input_hidden
        hidden_outputs = self.sigmoid(hidden_inputs)

        # Propagate from the hidden layer to the output layer
        final_inputs = np.dot(hidden_outputs, self.weights_hidden_output) + self.bias_hidden_output
        final_outputs = self.sigmoid(final_inputs)

        return final_outputs

    def train(self, inputs, targets, learning_rate):
        # Forward pass
        hidden_inputs = np.dot(inputs, self.weights_input_hidden) + self.bias_input_hidden
        hidden_outputs = self.sigmoid(hidden_inputs)
        final_inputs = np.dot(hidden_outputs, self.weights_hidden_output) + self.bias_hidden_output
        final_outputs = self.sigmoid(final_inputs)

        # Output-layer error
        output_errors = targets - final_outputs

        # Output-layer delta: error times the sigmoid derivative
        output_gradient = output_errors * self.sigmoid_derivative(final_outputs)

        # Backpropagate the output delta (not the raw error) to the hidden layer,
        # using weights_hidden_output before it is updated
        hidden_errors = np.dot(output_gradient, self.weights_hidden_output.T)
        hidden_gradient = hidden_errors * self.sigmoid_derivative(hidden_outputs)

        # Update weights and biases (gradient descent on 0.5 * sum((targets - final_outputs)**2))
        self.weights_hidden_output += np.dot(hidden_outputs.T, output_gradient) * learning_rate
        self.bias_hidden_output += np.sum(output_gradient, axis=0, keepdims=True) * learning_rate
        self.weights_input_hidden += np.dot(inputs.T, hidden_gradient) * learning_rate
        self.bias_input_hidden += np.sum(hidden_gradient, axis=0, keepdims=True) * learning_rate
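The update rule in `train` can be checked numerically. The sketch below assumes the squared-error loss `L = 0.5 * sum((t - y)**2)` (the original does not name a loss) and uses hypothetical helper names. It compares the backpropagated update directions with central finite differences of the loss in two variants: one where the hidden-layer error is computed from the output delta `(t - y) * y * (1 - y)`, and one where it is computed from the raw error `t - y`. Only the first matches the negative gradient, and the two differ only in the hidden-layer terms.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(params, x, t):
    # Assumed loss: L = 0.5 * sum((t - y)**2)
    W1, b1, W2, b2 = params
    h = sigmoid(x @ W1 + b1)
    y = sigmoid(h @ W2 + b2)
    return 0.5 * np.sum((t - y) ** 2)

def backprop_steps(params, x, t, propagate_delta=True):
    # Update directions for (W1, b1, W2, b2), i.e. what gets multiplied by the learning rate
    W1, b1, W2, b2 = params
    h = sigmoid(x @ W1 + b1)
    y = sigmoid(h @ W2 + b2)
    err = t - y
    delta_out = err * y * (1 - y)
    # Backprop proper sends delta_out back; the alternative sends the raw error
    back = delta_out if propagate_delta else err
    delta_hid = (back @ W2.T) * h * (1 - h)
    return [x.T @ delta_hid, delta_hid.sum(axis=0, keepdims=True),
            h.T @ delta_out, delta_out.sum(axis=0, keepdims=True)]

def numerical_grads(params, x, t, eps=1e-6):
    # Central finite differences of the loss for every parameter entry
    grads = []
    for p in params:
        g = np.zeros_like(p)
        for idx in np.ndindex(p.shape):
            original = p[idx]
            p[idx] = original + eps
            plus = loss(params, x, t)
            p[idx] = original - eps
            minus = loss(params, x, t)
            p[idx] = original  # restore the entry
            g[idx] = (plus - minus) / (2 * eps)
        grads.append(g)
    return grads

rng = np.random.default_rng(0)
x = np.array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]], dtype=float)
t = np.array([[0, 1], [1, 0], [1, 0], [0, 1]], dtype=float)
params = [rng.random((3, 4)), rng.random((1, 4)), rng.random((4, 2)), rng.random((1, 2))]

numeric = numerical_grads(params, x, t)
correct = backprop_steps(params, x, t, propagate_delta=True)
raw_error = backprop_steps(params, x, t, propagate_delta=False)

for name, g, c, r in zip(["W1", "b1", "W2", "b2"], numeric, correct, raw_error):
    # An update step should be the negative gradient, so c + g should be ~0
    print(f"{name}: delta propagation {np.max(np.abs(c + g)):.1e}, "
          f"raw-error propagation {np.max(np.abs(r + g)):.1e}")
```

For `W2` and `b2` both variants agree, since they do not depend on what is sent back to the hidden layer.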

# ニューラルネットワークのインスタンス化

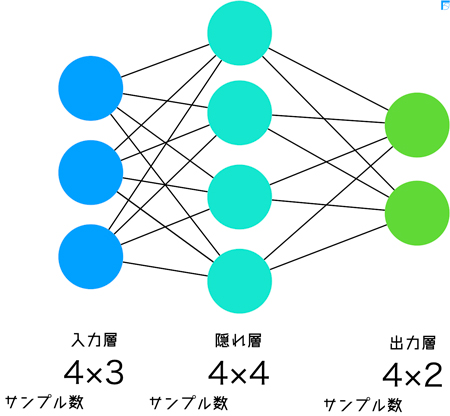

input_size = 3

hidden_size = 4

output_size = 2

learning_rate = 0.1

nn = NeuralNetwork(input_size, hidden_size, output_size)

# ダミーの入力データとターゲットデータの生成

inputs = np.array([[0, 0, 1],

[1, 1, 1],

[1, 0, 1],

[0, 1, 1]])

targets = np.array([[0, 1],

[1, 0],

[1, 0],

[0, 1]])

# 訓練

for epoch in range(10000):

nn.train(inputs, targets, learning_rate)

# 新しいデータでの予測

new_data = np.array([[1, 0, 0]])

predicted_output = nn.forward(new_data)

print("Predicted Output:", predicted_output)

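Running it prints output like the following. The exact numbers differ from run to run, because the weights are drawn with the unseeded `np.random.rand`.

Predicted Output: [[0.99036751 0.00946958]]

In the training data the target is always [x1, 1 - x1], where x1 is the first input, so an output close to [1, 0] for the input [1, 0, 0] is consistent with that pattern, even though its third input is 0, a value never seen during training.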
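Because the weights come from the unseeded `np.random.rand`, the prediction above and the matrices below change on every run. A reproducible variant could draw the initial values from a seeded generator and print the matrices before and after training. The sketch below is a function-based rewrite, not the class above; the seeded `np.random.default_rng(42)`, the dictionary layout, and the helper names `init_params`, `train_step` and `dump` are assumptions for illustration. Its update backpropagates the output delta to the hidden layer.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(rng, n_in, n_hidden, n_out):
    # Uniform [0, 1) values, like np.random.rand, but from a seeded generator
    return {
        "W1": rng.random((n_in, n_hidden)),
        "b1": rng.random((1, n_hidden)),
        "W2": rng.random((n_hidden, n_out)),
        "b2": rng.random((1, n_out)),
    }

def forward(p, x):
    h = sigmoid(x @ p["W1"] + p["b1"])
    return h, sigmoid(h @ p["W2"] + p["b2"])

def train_step(p, x, t, lr):
    # One full-batch backpropagation step for the loss 0.5 * sum((t - y)**2)
    h, y = forward(p, x)
    delta_out = (t - y) * y * (1 - y)
    delta_hid = (delta_out @ p["W2"].T) * h * (1 - h)  # uses W2 before it is updated
    p["W2"] += lr * (h.T @ delta_out)
    p["b2"] += lr * delta_out.sum(axis=0, keepdims=True)
    p["W1"] += lr * (x.T @ delta_hid)
    p["b1"] += lr * delta_hid.sum(axis=0, keepdims=True)

LABELS = {"W1": "Weights (Input to Hidden)", "b1": "Bias (Input to Hidden)",
          "W2": "Weights (Hidden to Output)", "b2": "Bias (Hidden to Output)"}

def dump(p, prefix=""):
    # Print every matrix under the same labels as the listing below
    for key, label in LABELS.items():
        print(f"{prefix}{label}:")
        print(p[key])

rng = np.random.default_rng(42)  # fixed seed: identical initial matrices on every run
x = np.array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]], dtype=float)
t = np.array([[0, 1], [1, 0], [1, 0], [0, 1]], dtype=float)

params = init_params(rng, 3, 4, 2)
dump(params)
for _ in range(10000):
    train_step(params, x, t, 0.1)
dump(params, prefix="Trained ")

_, prediction = forward(params, np.array([[1.0, 0.0, 0.0]]))
print("Predicted Output:", prediction)
```

With a fixed seed, rerunning the script prints the same initial matrices, trained matrices and prediction each time.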

The initial (randomly generated) matrices used in this run were as follows.

Weights (Input to Hidden):
[[0.86559079 0.35543461 0.98290313 0.63647904]
 [0.24819431 0.26436123 0.84597465 0.94019566]
 [0.67297261 0.43592189 0.127277   0.37049044]]

Bias (Input to Hidden):
[[0.10011356 0.7652955  0.20811188 0.8234742 ]]

Weights (Hidden to Output):
[[0.06777084 0.38948834]
 [0.24392602 0.34049731]
 [0.53378961 0.09676239]
 [0.6962991  0.53805222]]

Bias (Hidden to Output):
[[0.85133557 0.34678493]]

After training, the matrices took the following values.

Trained Weights (Input to Hidden):
[[ 9.55904228 -8.06777488 -6.38659746  9.20550939]
 [-0.16364794  0.19104817  0.25018966 -0.14233093]
 [-2.02104794  1.68517317  1.51901674 -1.82576135]]

Trained Bias (Input to Hidden):
[[-2.58297613  2.15517165  1.44218629 -2.61282293]]

Trained Weights (Hidden to Output):
[[ 3.43550539 -3.05135711]
 [-1.63683914  2.43330975]
 [-1.27509865  1.14375031]
 [ 2.9603573  -2.71384294]]

Trained Bias (Hidden to Output):
[[-1.74223759  1.09382922]]
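As a consistency check, the printed matrices can be fed through the same computation as `forward`. This sketch copies the values listed above (so they carry only the printed eight decimals) and evaluates the input [1, 0, 0] with the initial and with the trained parameters. The helper name `predict` is hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(x, W1, b1, W2, b2):
    # Same computation as NeuralNetwork.forward
    hidden = sigmoid(x @ W1 + b1)
    return sigmoid(hidden @ W2 + b2)

# Initial matrices, copied from the listing above
W1_init = np.array([[0.86559079, 0.35543461, 0.98290313, 0.63647904],
                    [0.24819431, 0.26436123, 0.84597465, 0.94019566],
                    [0.67297261, 0.43592189, 0.127277, 0.37049044]])
b1_init = np.array([[0.10011356, 0.7652955, 0.20811188, 0.8234742]])
W2_init = np.array([[0.06777084, 0.38948834],
                    [0.24392602, 0.34049731],
                    [0.53378961, 0.09676239],
                    [0.6962991, 0.53805222]])
b2_init = np.array([[0.85133557, 0.34678493]])

# Trained matrices, copied from the listing above
W1_trained = np.array([[9.55904228, -8.06777488, -6.38659746, 9.20550939],
                       [-0.16364794, 0.19104817, 0.25018966, -0.14233093],
                       [-2.02104794, 1.68517317, 1.51901674, -1.82576135]])
b1_trained = np.array([[-2.58297613, 2.15517165, 1.44218629, -2.61282293]])
W2_trained = np.array([[3.43550539, -3.05135711],
                       [-1.63683914, 2.43330975],
                       [-1.27509865, 1.14375031],
                       [2.9603573, -2.71384294]])
b2_trained = np.array([[-1.74223759, 1.09382922]])

new_data = np.array([[1.0, 0.0, 0.0]])
before = predict(new_data, W1_init, b1_init, W2_init, b2_init)
after = predict(new_data, W1_trained, b1_trained, W2_trained, b2_trained)
print("Before training:", before)  # roughly [[0.89 0.80]]: both outputs high
print("After training:", after)    # close to the printed [[0.99036751 0.00946958]]
```

With the trained matrices the result should reproduce the Predicted Output shown earlier, while the untrained network gives high values on both outputs and does not yet separate the classes.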
